Brain2Text: An AI Model That Maps Brainwaves to Text

Abstract:

In today's rapidly evolving technological landscape, there is a profound need to bridge the communication gap for individuals with speech impediments. Many people in society struggle to communicate, face challenges in expressing their thoughts, and find it difficult to engage in conversation due to conditions such as aphasia. Our project is a contribution toward helping these people communicate with ease.

It is well established that when a person thinks, specific brainwaves are generated that correspond to their thoughts. These brainwaves follow particular patterns and carry the essence of those thoughts. This phenomenon inspired us to extract and decipher these brainwaves in order to decode the intended message. The project thus brings together neuroscience and artificial intelligence to bridge the communication gap.

The fundamental ambition of this project is to harness the capabilities of AI techniques to empower individuals with speech impediments to communicate effortlessly. The ultimate goal is to provide these people with some means of communication.

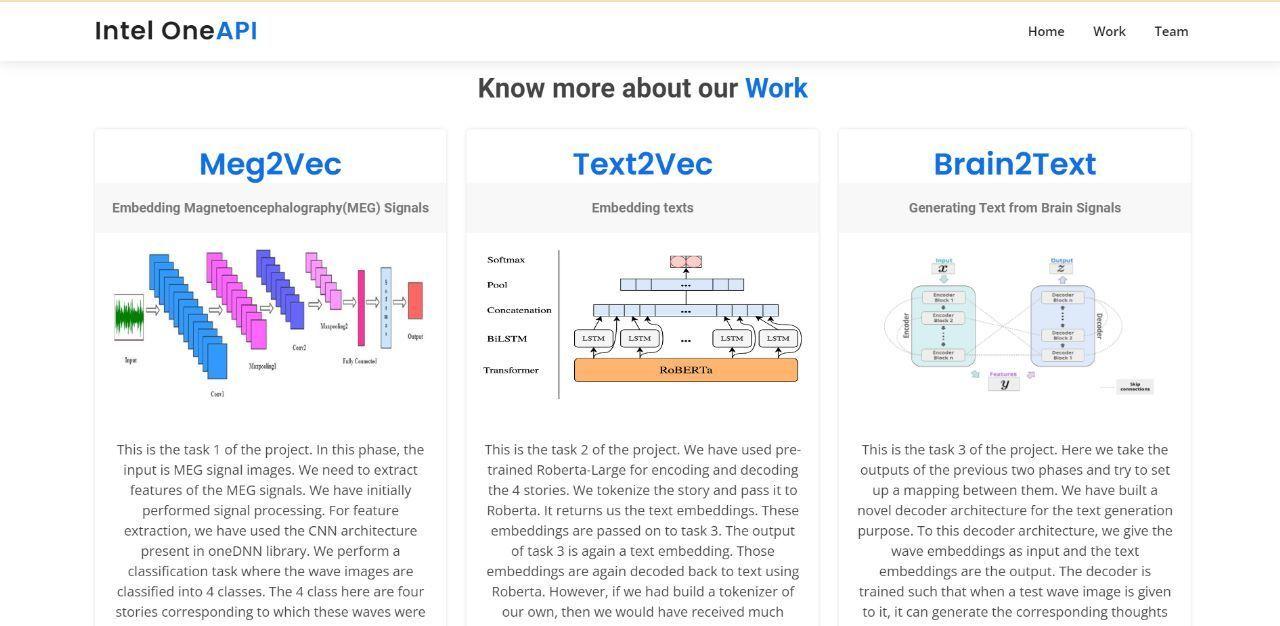

The project is divided into three parts: MEG2VEC, TEXT2VEC and BRAIN2TEXT. MEG2VEC converts Magnetoencephalography (MEG) signals into a vectorized form. TEXT2VEC converts text into a vectorized form. BRAIN2TEXT maps MEG vectors to text vectors. For these three steps, we used a CNN from the oneDNN library for MEG2VEC, RoBERTa for TEXT2VEC and a novel decoder architecture for BRAIN2TEXT.
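The data flow through the three stages can be sketched in a few lines of Python. This is only an illustrative toy, not the project's implementation: `meg2vec`, `TEXT_VECTORS` and `brain2text` are hypothetical stand-ins (a per-channel mean, a hand-written lookup table, and a nearest-neighbour search by cosine similarity) for the CNN, RoBERTa and the learned decoder, and the list-of-channels input layout is an assumption.

```python
import math


def meg2vec(meg_signal):
    """Toy stand-in for the CNN encoder: average each channel over time.

    Assumes meg_signal is a list of channels, each a non-empty list of samples.
    """
    return [sum(channel) / len(channel) for channel in meg_signal]


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def brain2text(meg_vector, text_vectors):
    """Toy stand-in for the decoder: return the text whose vector is closest."""
    return max(
        text_vectors,
        key=lambda text: cosine_similarity(meg_vector, text_vectors[text]),
    )


# Toy stand-in for TEXT2VEC: hand-written vectors instead of RoBERTa embeddings.
TEXT_VECTORS = {"hello": [1.0, 0.0], "goodbye": [0.0, 1.0]}

if __name__ == "__main__":
    recording = [[0.9, 1.1, 1.0], [0.1, 0.0, 0.2]]  # 2 channels x 3 samples
    print(brain2text(meg2vec(recording), TEXT_VECTORS))
```

The point of the sketch is the interface: both encoders produce vectors in a shared space, and the final stage turns an MEG vector into text by comparing it against text vectors.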

Inspiration:

Researchers at the University of California, San Francisco began this line of study, and their paper was published in The New England Journal of Medicine:

https://developer.nvidia.com/blog/transforming-brain-waves-into-words-with-ai/

https://www.nejm.org/doi/full/10.1056/NEJMoa2027540?query=featured_home

More recently, Meta has also started working on this problem. A recent paper from them is:

https://hal.science/hal-03808317/file/BrainMagick_Neurips%20%283%29.pdf

Their work focuses on finding a correlation between audio waves and brainwaves.

Our Contributions to Society:

✅ A contribution to speech-impaired people in society: This project offers a solution that leverages deep learning techniques to bridge the communication gap for individuals with speech impediments, providing them with an alternative means of expressing themselves. It could ease the frustration and emotional burden often experienced by speech-impaired individuals, and enable people suffering from paralysis, aphasia or other speech impediments to communicate with ease.

✅ A contribution to medical research: This project would be a huge advancement in the field of neuroscience and linguistics using AI. This will help doctors and medical researchers gain new insights into the relationships between brain wave patterns and linguistic expressions.

✅ A contribution to mental health: This project could be used to read the minds of people suffering from Dissociative Identity Disorder, Post-Traumatic Stress Disorder, and sleep disorders, potentially helping to find cures for such conditions.

✅ A contribution to education: This project also contributes to education since this will enable talented professors with communication disabilities to deliver lectures much better. This will also enhance educational experiences for students with disabilities, fostering better engagement and learning.

✅ Law Enforcement and Forensic Research: In forensic science, this project can potentially assist law enforcement agencies in understanding the thought processes and intentions of suspected criminals.

✅ Medical Care: This project could be used to decipher the brainwaves of comatose patients, helping medical caregivers gain better insight into their patients' cognitive state and establish a rudimentary form of communication with them, resulting in better caregiving.

Future Prospect:

Our future plan is to create a wearable device that resembles headphones. The core functionality of the device is to read brainwaves, convert them into text, and use a voice assistant to read the text aloud. Developing the hardware for such a wearable device involves several challenges and requires expertise in sensor technology and electronics. Partnering with companies experienced in hardware architecture and wearable technology can accelerate the development process. These companies can bring their knowledge of designing compact and functional hardware components to enhance this project.

To ensure the medical effectiveness and safety of our device, collaboration with medical professionals is crucial. Neuroscientists and medical researchers can provide guidance on the interpretation of brainwaves and the relevance of the results obtained. Their expertise can help us refine our device's algorithms and make sure that the device is safe to wear. The potential impact of this project goes beyond medical research. If successful, the brainwave-reading wearable could have applications in various fields such as education, communication, and assistive technology. It would give individuals with speech impediments a means of communication.

Limitations:

-

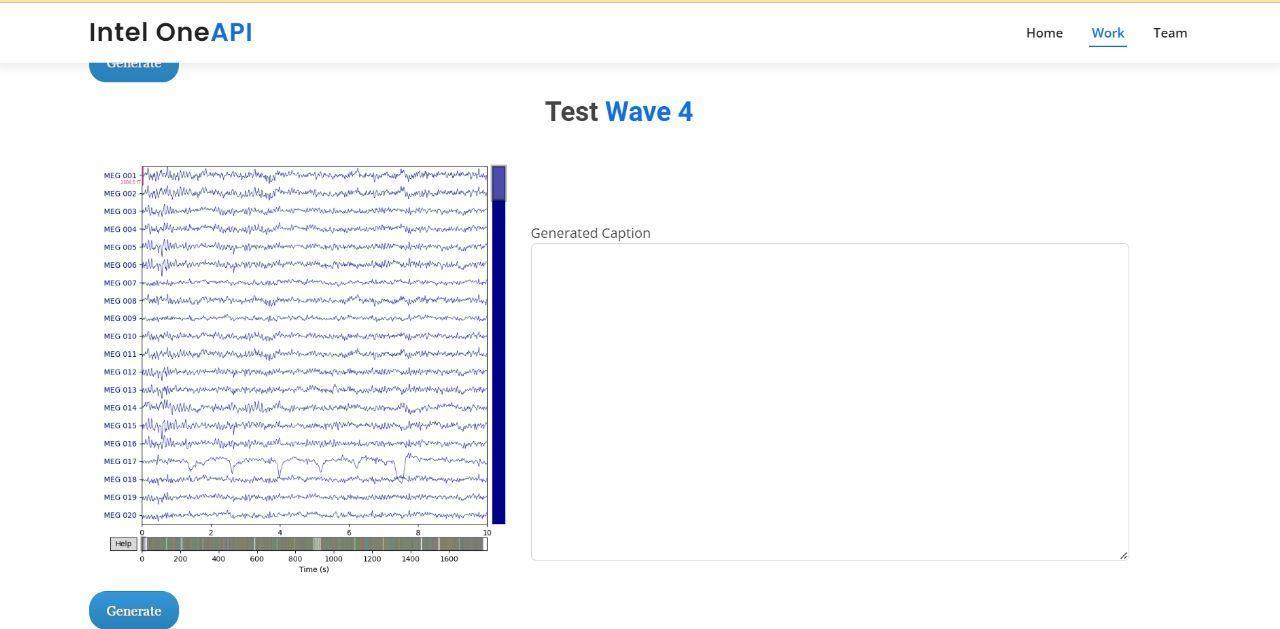

Due to super time constraint, we are unable to build a tokenizer of our own. Although, we have a novel decoder architecture, but the architecture is using pre-trained embeddings from Roberta. Due to this reason, our outputs are specific to Roberta style of embeddings which is not always meaningful to the end user. To resolve this issue, we have to build a tokenizer of our own.

-

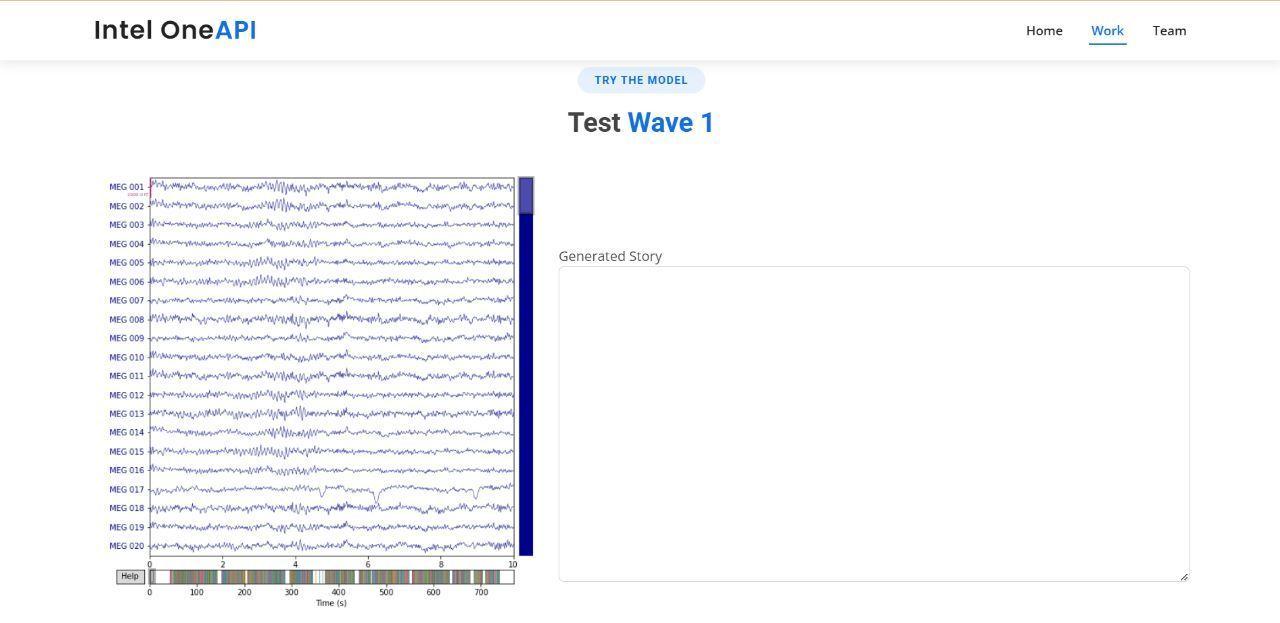

Since we have brainwaves corresponding to long stories, each datapoint is huge in length. Models find it difficult to learn from datapoints having long seperated dependencies. Rather, if we had conversational data and their corresponding waves, then model learning would have been far better since the datapoints are shorter in length.
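To make the first limitation concrete, here is a minimal sketch of what "a tokenizer of our own" could look like at its simplest: a word-level vocabulary built from a corpus, with `encode` and `decode` that round-trip known words. The class name `WordTokenizer`, the `<unk>` token and the whitespace splitting are all assumptions made for illustration; a real tokenizer for this project would need a proper corpus and a more careful design.

```python
class WordTokenizer:
    """Toy word-level tokenizer (hypothetical, for illustration only)."""

    UNKNOWN = "<unk>"

    def __init__(self, corpus):
        # Build a sorted vocabulary from whitespace-separated, lowercased words.
        words = sorted({word for line in corpus for word in line.lower().split()})
        self.id_to_word = [self.UNKNOWN] + words
        self.word_to_id = {word: i for i, word in enumerate(self.id_to_word)}

    def encode(self, text):
        """Map text to a list of ids; unseen words map to id 0 (<unk>)."""
        return [self.word_to_id.get(word, 0) for word in text.lower().split()]

    def decode(self, ids):
        """Map a list of ids back to a space-separated string."""
        return " ".join(self.id_to_word[i] for i in ids)


if __name__ == "__main__":
    tokenizer = WordTokenizer(["the cat sat", "the dog ran"])
    ids = tokenizer.encode("the cat ran")
    print(ids, tokenizer.decode(ids))
```

Because every id maps to a whole word, decoded output stays readable, at the cost of not handling words outside the corpus.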

Steps for improvement:

- Curating a proper dataset: A model that extracts thoughts from brainwaves will perform best if trained on conversational data and the corresponding brainwaves. This is because conversational utterances are shorter and therefore produce shorter waves. Learning patterns from shorter waves and shorter texts would be much easier for the model.

- Proper Data Type: Instead of working from MEG images, we could obtain better performance by working from MEG readings. This is because we inevitably lose some information when extracting features from images. Raw numerical MEG readings would perform better, since no information extraction step would be needed.

- Medical Guidance: Collaborating with doctors and medical researchers is of utmost importance for the success of this project.

- Segmentation: If we have to work with MEG images only, we can add a segmentation step to the image preprocessing. Instead of having 20 MEG waves in one image, we can segment the image into 20 parts, each containing an individual wave. This step would help the CNN focus on individual waves and extract better information with minimal loss. Due to time constraints, we were unable to perform this step, but we intend to do it in the future.

- Tokenizer: Having a corpus of our own and developing a tokenizer from scratch is very important for smooth encoding and accurate decoding of text. Again, due to time constraints, we were unable to perform this step.
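The segmentation step proposed above could be sketched as follows. This is a minimal illustration under a strong assumption that is not confirmed by the project: the 20 waves are stacked vertically in bands of roughly equal height, so cutting the image into 20 horizontal strips isolates one wave per strip. The function name `split_into_strips` and the list-of-rows image representation are hypothetical.

```python
def split_into_strips(image, n_strips=20):
    """Split an image (a list of pixel rows) into n_strips horizontal strips.

    Assumes the waves are stacked vertically in roughly equal-height bands.
    Strip heights differ by at most one row when the height is not divisible.
    """
    height = len(image)
    if n_strips <= 0 or height < n_strips:
        raise ValueError("image must have at least n_strips rows")
    strips = []
    for i in range(n_strips):
        top = i * height // n_strips
        bottom = (i + 1) * height // n_strips
        strips.append(image[top:bottom])
    return strips


if __name__ == "__main__":
    # Dummy 100-row, 4-column "image" whose pixel values equal the row index.
    image = [[row] * 4 for row in range(100)]
    strips = split_into_strips(image)
    print(len(strips), len(strips[0]))
```

Each strip could then be passed to the CNN separately. If the waves in the real images are not evenly spaced, the strip boundaries would have to be detected rather than computed.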

Our Learning from oneAPI:

✅ Using IDC (Intel Developer Cloud): This platform gave us cloud servers that we could easily connect to from our local machine, letting us use the GPUs on those servers. This helped us train our models quickly, saving time and allowing us to focus on improving our work.

✅ Using oneDNN: This library provides optimized building blocks for neural networks such as ANNs, RNNs and CNNs. Since we had to extract features from images, we used the CNN architecture supported by this library. This helped us train our models quickly, reducing the training time for the task.

✅ Using DevMesh: This platform gives us a space to showcase our work and share it with other developers in the network. It helps us connect with other developers, learn from them and expand our network.

Our Work:

https://github.com/NASS2023/Brain2Text