Integrating Arhat deep learning framework with Intel oneDNN library and OpenVINO toolkit

Introduction

Arhat is a cross-platform framework designed for efficient deployment of deep learning inference workflows in the cloud and at the edge. Unlike conventional deep learning frameworks, Arhat translates neural network descriptions directly into lean standalone executable code.

In developing Arhat, we pursued two principal objectives:

- providing a unified, platform-agnostic approach to deep learning deployment, and

- facilitating performance evaluation of various platforms on a common basis.

Arhat provides interoperability with Intel deep learning software, including the oneDNN library and the OpenVINO toolkit.

In this article we discuss the design and architecture of Arhat and demonstrate its use for deploying object detection models in embedded applications.

Challenges of deep learning deployment

Arhat addresses engineering challenges of modern deep learning caused by high fragmentation of its hardware and software ecosystems. This fragmentation makes deployment of each deep learning model a substantial engineering project and often requires using cumbersome software stacks.

Currently, the deep learning ecosystem is a heterogeneous collection of software, hardware, and exchange formats, which includes:

- training software frameworks that produce trained models,

- software frameworks designed specifically for inference,

- exchange formats for neural networks,

- computing hardware platforms from different vendors,

- platform-specific low level programming tools and libraries.

Training frameworks (like TensorFlow, PyTorch, or CNTK) specialize in the construction and training of deep learning models.

These frameworks do not necessarily provide the most efficient way to run inference in production. For this reason, there are also frameworks designed specifically for inference (like OpenVINO, TensorRT, MIGraphX, or ONNX Runtime), which are frequently optimized for the hardware of specific vendors.

Various exchange formats (like ONNX, NNEF, TensorFlow Lite, OpenVINO IR, or UFF) are designed for porting pre-trained models between the software frameworks. Various vendors provide computing hardware (like CPU, GPU, or specialized AI accelerators) optimized for deep learning workflows. Since deep learning is very computationally intensive, this hardware typically has various accelerator capabilities.

To utilize this hardware efficiently, there exist various platform-specific low level programming tools and libraries (like Intel oneAPI/oneDNN, NVIDIA CUDA/cuDNN, or AMD HIP/MIOpen).

All these components are evolving at a very rapid pace. Compatibility and interoperability between individual components are frequently limited. There is a clear need for a streamlined approach to navigating this complex landscape.

Benchmarking of deep learning performance represents a separate challenge. At present, teams of highly skilled software engineers are busy tuning a few popular models (ResNet50 and BERT are the favorites) to squeeze the last bits of performance from their computing platforms. However, end users frequently want to evaluate the performance of models of their own choice. Such users need methods for achieving the best inference performance on the chosen platforms, as well as for interpreting and comparing the benchmarking results. Furthermore, they typically have limited time and budget and might not have a dedicated team of deep learning experts at their disposal. This task presents interesting challenges of its own and requires a dedicated set of tools and technologies.

Reference architecture

To address these challenges, we have developed Arhat. The main idea behind it is quite simple: what if we can generate executable code individually for each combination of a model and a target platform? Obviously, this will yield a rather compact code and can greatly simplify the deployment process.

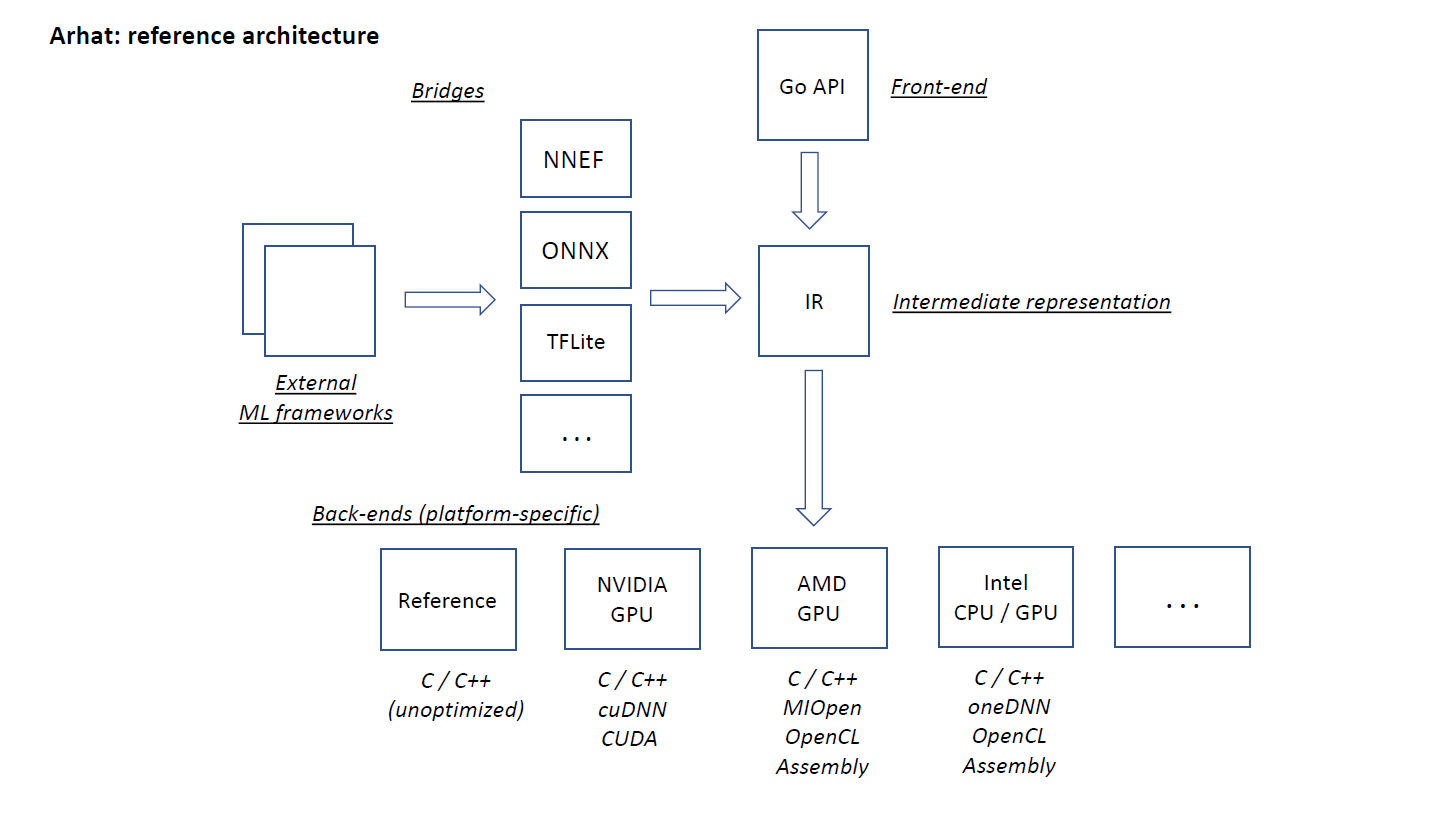

Conceptual Arhat architecture as originally designed is shown on the following figure.

The central component is the Arhat engine, which receives model descriptions from various sources and steers the back-ends that generate platform-specific code. There are two principal ways of obtaining model descriptions:

- Arhat provides the front-end API for programmatic description of models.

- Pre-trained models can be imported from the external frameworks via bridge components supporting various exchange formats.

The interchangeable back-ends generate code for various target platforms.

This architecture is extensible and provides regular means for adding support for new model layer types, exchange formats, and target platforms.

The Arhat core is implemented in pure Go. Therefore, unlike most conventional platforms, which use Python, we also use Go as the language for the front-end API. This API specifies models by combining tensor operators selected from an extensible and steadily growing operator catalog. The API defines two levels of model specification. The higher level is common to all platforms and workflows. The lower level is platform- and workflow-specific. The Arhat engine performs automated conversion from the higher to the lower level.
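To make the two-level idea more concrete, here is a minimal Go sketch. It does not use the actual Arhat API: every type, function, and kernel name in it (Op, Model, Lower, the catalog entries) is hypothetical and chosen only for illustration. A platform-neutral model is built as a list of operators, and a lowering step binds each operator to a platform-specific kernel taken from a per-target catalog.

```go
package main

import "fmt"

// Op is a hypothetical platform-neutral tensor operator (higher level).
type Op struct {
	Kind   string   // operator type, e.g. "Conv2D"
	Name   string   // unique name of this operator instance
	Inputs []string // names of the operators feeding this one
}

// Model is a hypothetical higher-level model: an ordered list of operators.
type Model struct {
	Ops []Op
}

// Add appends an operator and returns its name so that calls can be chained.
func (m *Model) Add(kind, name string, inputs ...string) string {
	m.Ops = append(m.Ops, Op{Kind: kind, Name: name, Inputs: inputs})
	return name
}

// LoweredOp is the hypothetical lower-level form: an operator bound to a
// platform-specific kernel.
type LoweredOp struct {
	Op
	Kernel string
}

// Lower converts the higher-level model to the lower level using a
// per-target catalog that maps operator kinds to kernel names.
func Lower(m *Model, catalog map[string]string) ([]LoweredOp, error) {
	out := make([]LoweredOp, 0, len(m.Ops))
	for _, op := range m.Ops {
		kernel, ok := catalog[op.Kind]
		if !ok {
			return nil, fmt.Errorf("operator %q (%s) is not supported by this target", op.Name, op.Kind)
		}
		out = append(out, LoweredOp{Op: op, Kernel: kernel})
	}
	return out, nil
}

func main() {
	m := &Model{}
	x := m.Add("Input", "image")
	x = m.Add("Conv2D", "conv1", x)
	x = m.Add("Relu", "relu1", x)
	m.Add("Softmax", "prob", x)

	// Kernel names are placeholders, not real library symbols.
	catalog := map[string]string{
		"Input":   "host_input",
		"Conv2D":  "target_conv",
		"Relu":    "target_eltwise",
		"Softmax": "target_softmax",
	}

	lowered, err := Lower(m, catalog)
	if err != nil {
		fmt.Println("lowering failed:", err)
		return
	}
	for _, op := range lowered {
		fmt.Printf("%-6s %-8s -> %s\n", op.Name, op.Kind, op.Kernel)
	}
}
```

In this sketch, supporting a new target amounts to supplying a different catalog, which loosely mirrors the interchangeable back-ends described above. A real lowering step would also have to deal with tensor shapes, memory layouts, and workflow-specific details.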

oneDNN back-end

Intel oneAPI Deep Neural Network Library (oneDNN) is a cross-platform library implementing basic deep learning operations optimized for various Intel platforms.

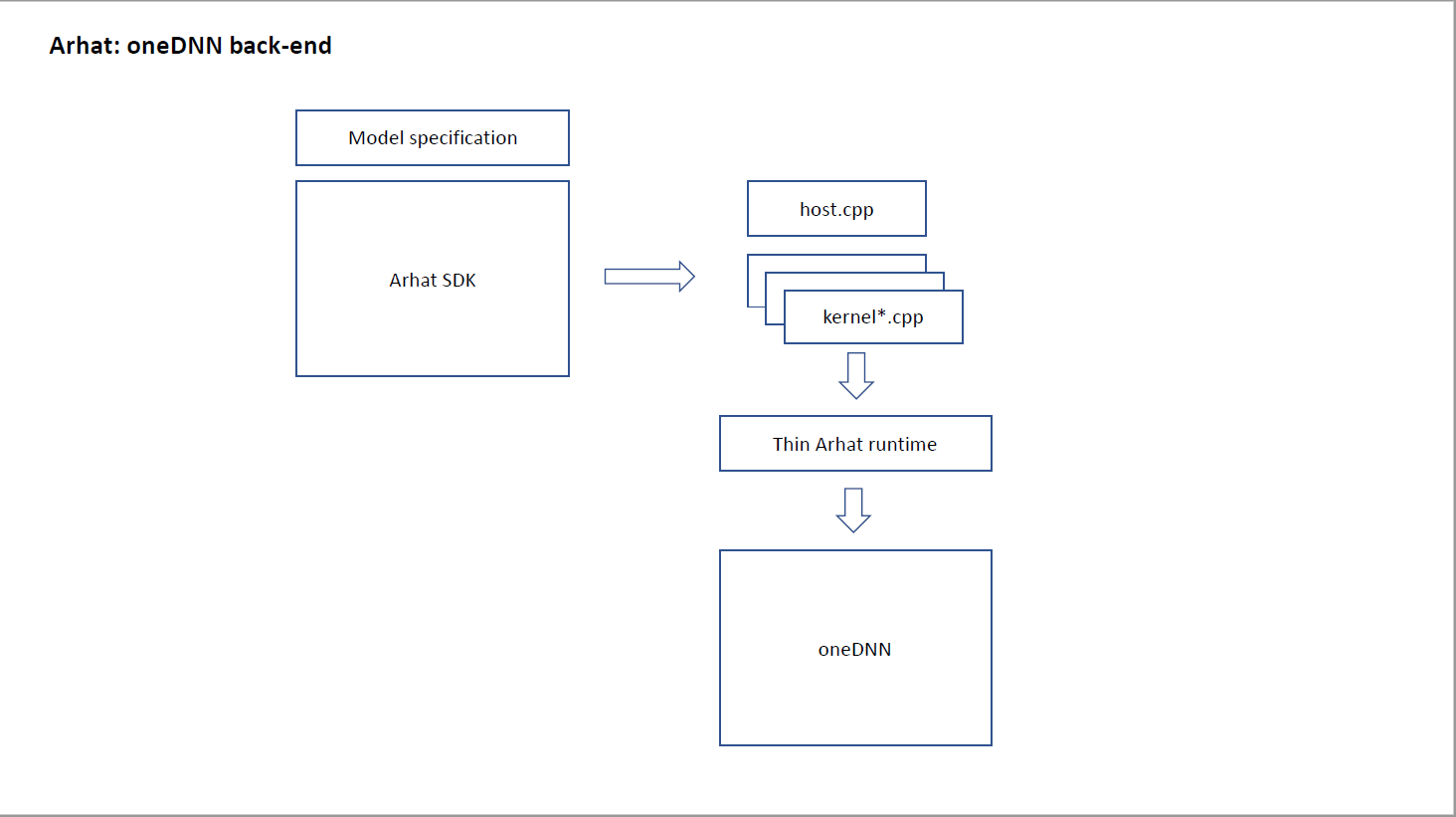

Arhat supports Intel hardware via oneDNN back-end. The architecture of this back-end is shown on the following figure.

The back-end translates model specification into C++ code consisting of one host module and multiple kernel modules. This code runs on the top of a thin Arhat runtime that directly interacts with oneDNN. This approach results in a very slim deployable software stack that can run on any Intel hardware supporting oneDNN.

Initial proof of concept: image classification

As an initial proof of concept, we have ported the entire Python torchvision image classification library to the Arhat Go API and used Arhat to generate code for three target platforms (Intel, NVIDIA, and AMD). All the models demonstrated consistent results on all platforms.

This experiment has shown that Arhat can support an extensive collection of convolutional networks of various families. Also, Go has proven to be an excellent language for describing neural networks.

The Go code for all models is open source and available on GitHub.

Interoperability with OpenVINO

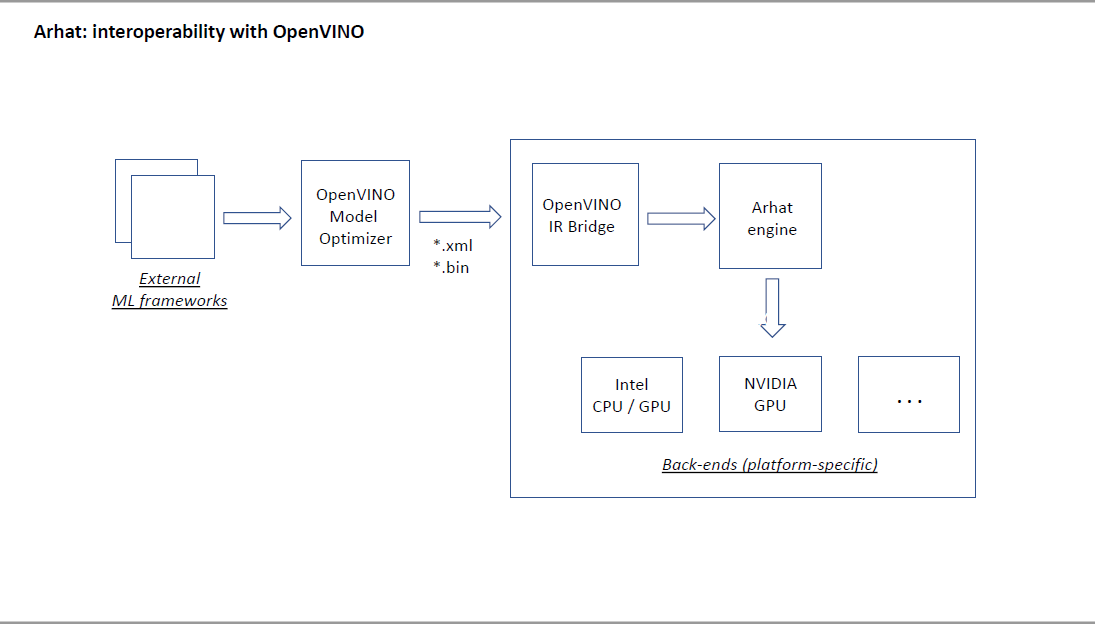

Intel OpenVINO toolkit allows developers to deploy pre-trained models on various Intel hardware platforms. It includes the Model Optimizer tool that converts pre-trained models from various popular formats to the uniform Intermediate Representation (IR).

We leverage OpenVINO Model Optimizer for supporting various model representation formats in Arhat. For this purpose, we have designed the OpenVINO IR bridge that can import models produced by the OpenVINO Model Optimizer. This immediately enables Arhat to handle all model formats supported by OpenVINO.

The respective workflow is shown on the following figure.

This development, on the one hand, integrates Arhat into the Intel deep learning ecosystem and, on the other hand, makes OpenVINO capable of native inference on platforms from different vendors. With Arhat, end users can view OpenVINO as a vendor-neutral solution. This might be beneficial for the adoption of OpenVINO as an inference platform of choice.
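As an illustration of what an IR bridge has to consume, the following Go sketch reads the graph topology from the XML part of an IR file using only the standard library. It is not the Arhat bridge: the struct names and the parseIR helper are hypothetical, the sample document is hand-written rather than produced by Model Optimizer, and only a small subset of the IR layout (layers with id, name, and type, plus edges between layers) is modeled. Ports, shapes, layer attributes, and the separate binary weights file are ignored.

```go
package main

import (
	"encoding/xml"
	"fmt"
)

// irNet mirrors a small part of the IR XML layout (assumed subset).
type irNet struct {
	XMLName xml.Name  `xml:"net"`
	Name    string    `xml:"name,attr"`
	Layers  []irLayer `xml:"layers>layer"`
	Edges   []irEdge  `xml:"edges>edge"`
}

type irLayer struct {
	ID   int    `xml:"id,attr"`
	Name string `xml:"name,attr"`
	Type string `xml:"type,attr"`
}

type irEdge struct {
	FromLayer int `xml:"from-layer,attr"`
	ToLayer   int `xml:"to-layer,attr"`
}

// sampleIR is a hand-written toy document used only for this sketch.
const sampleIR = `<?xml version="1.0"?>
<net name="tiny" version="10">
  <layers>
    <layer id="0" name="data" type="Parameter" version="opset1"/>
    <layer id="1" name="relu" type="ReLU" version="opset1"/>
    <layer id="2" name="out" type="Result" version="opset1"/>
  </layers>
  <edges>
    <edge from-layer="0" from-port="0" to-layer="1" to-port="0"/>
    <edge from-layer="1" from-port="1" to-layer="2" to-port="0"/>
  </edges>
</net>`

// parseIR decodes the layer list and the edge list of an IR document.
func parseIR(data []byte) (*irNet, error) {
	var n irNet
	if err := xml.Unmarshal(data, &n); err != nil {
		return nil, err
	}
	return &n, nil
}

func main() {
	n, err := parseIR([]byte(sampleIR))
	if err != nil {
		fmt.Println("parse error:", err)
		return
	}
	fmt.Printf("model %q: %d layers, %d edges\n", n.Name, len(n.Layers), len(n.Edges))
	for _, l := range n.Layers {
		fmt.Printf("  layer %d: %s (%s)\n", l.ID, l.Name, l.Type)
	}
}
```

A bridge would then map each layer type found this way to an operator from the framework's own catalog and rebuild the graph from the edge list.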

Case study: object detection

We have validated the approach with a case study: object detection on embedded platforms. For this study, we selected 8 representative pre-trained models from 3 different families (SSD, Faster R-CNN, and YOLO) in the OpenVINO Model Zoo. These models include:

SSD:

- ssd_mobilenet_v1_fpn_coco (640 x 640)

- ssd_mobilenet_v2_coco (300 x 300)

- ssd_resnet50_v1_fpn_coco (640 x 640)

Faster R-CNN:

- faster_rcnn_inception_v2_coco (600 x 1024)

- faster_rcnn_resnet50_coco (600 x 1024)

YOLO:

- yolo_v2_tf (608 x 608)

- yolo_v3_tf (416 x 416)

- yolo_v4_tf (608 x 608)

(The input image sizes are shown in parentheses.)

We used Arhat to generate code for Intel and NVIDIA and evaluated it on the respective embedded platforms.

We started with a performance comparison of Arhat code powered by oneDNN against the open source version of OpenVINO.

As a platform, we used Tiger Lake i7-1185G7E, a system combining 4 CPU cores with an integrated GPU. To obtain stable results and emulate an embedded environment, we disabled turbo boost. We measured the average processing time for one image. Separate test runs were made for the CPU cores and the GPU. As a baseline, we also included metrics for the ResNet50 image classification network.
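A measurement of this kind can be sketched in Go as follows. This is an illustrative harness, not the one used for the study: the averageLatency helper, the warm-up phase, and the run counts are all assumptions, and the workload is a stand-in that merely sleeps.

```go
package main

import (
	"fmt"
	"time"
)

// averageLatency calls infer warmup times without timing it, then times
// runs further calls and returns the mean duration of one call.
func averageLatency(infer func(), warmup, runs int) time.Duration {
	if runs <= 0 {
		return 0
	}
	for i := 0; i < warmup; i++ {
		infer()
	}
	start := time.Now()
	for i := 0; i < runs; i++ {
		infer()
	}
	return time.Since(start) / time.Duration(runs)
}

func main() {
	// Stand-in for processing one image; replace with a real inference call.
	infer := func() { time.Sleep(2 * time.Millisecond) }

	avg := averageLatency(infer, 3, 20)
	fmt.Printf("average time per image: %.2f ms\n", float64(avg)/float64(time.Millisecond))
}
```

The untimed warm-up calls keep one-time costs such as lazy initialization out of the average. Whether a warm-up is appropriate depends on what the benchmark is meant to capture.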

Results for the CPU and the GPU are summarized in the following tables. The numbers represent time, in milliseconds, required to process one image.

[CPU]

Model                             Arhat       OpenVINO
------------------------------------------------------
resnet50-v1-7                     23.5        23.0
ssd_mobilenet_v1_fpn_coco         397         401
ssd_mobilenet_v2                  14.7        14.9
ssd_resnet50_v1_fpn_coco          563         568
faster_rcnn_inception_v2_coco     244         236
faster_rcnn_resnet50_coco         722         719
yolo_v2_tf                        188         190
yolo_v3_tf                        205         201
yolo_v4_tf                        409         427

[GPU]

Model                             Arhat       OpenVINO
------------------------------------------------------
resnet50-v1-7                     10.1        11.3
ssd_mobilenet_v1_fpn_coco         144         125
ssd_mobilenet_v2                  9.0         10.7
ssd_resnet50_v1_fpn_coco          195         183
faster_rcnn_inception_v2_coco     117         82.8
faster_rcnn_resnet50_coco         252         259
yolo_v2_tf                        64.4        60.8
yolo_v3_tf                        72.3        68.2
yolo_v4_tf                        140         135

Initial comparison with Jetson Xavier NX

As the next step, we included an NVIDIA platform in the evaluation. We chose Jetson Xavier NX. This system has 6 ARM CPU cores and a GPU. It offers configurable power modes with 10W and 15W TDP envelopes and 2, 4, or 6 cores enabled. The best performance was observed with 15W and 2 cores, so we used this mode for the comparison.

Results are summarized in the following table. As before, the numbers represent the time, in milliseconds, required to process one image.

Model                             Arhat         OpenVINO      Arhat/cuDNN
                                  Tiger Lake    Tiger Lake    Xavier NX
-------------------------------------------------------------------------
resnet50-v1-7                     10.1          11.3          26.7
ssd_mobilenet_v1_fpn_coco         144           125           212
ssd_mobilenet_v2                  9.0           10.7          23.4
ssd_resnet50_v1_fpn_coco          195           183           305
faster_rcnn_inception_v2_coco     117           82.8          195
faster_rcnn_resnet50_coco         252           259           413
yolo_v2_tf                        64.4          60.8          114
yolo_v3_tf                        72.3          68.2          139
yolo_v4_tf                        140           135           250

This table shows a substantial performance gap between the two systems. This raises the question of whether the Arhat cuDNN back-end really achieves the best results on NVIDIA hardware and whether the comparison is fair.

For NVIDIA GPUs, the ultimate inference tool is TensorRT, so it made sense to include it in the evaluation. To do this, however, we had to find a way to run OpenVINO models on TensorRT.

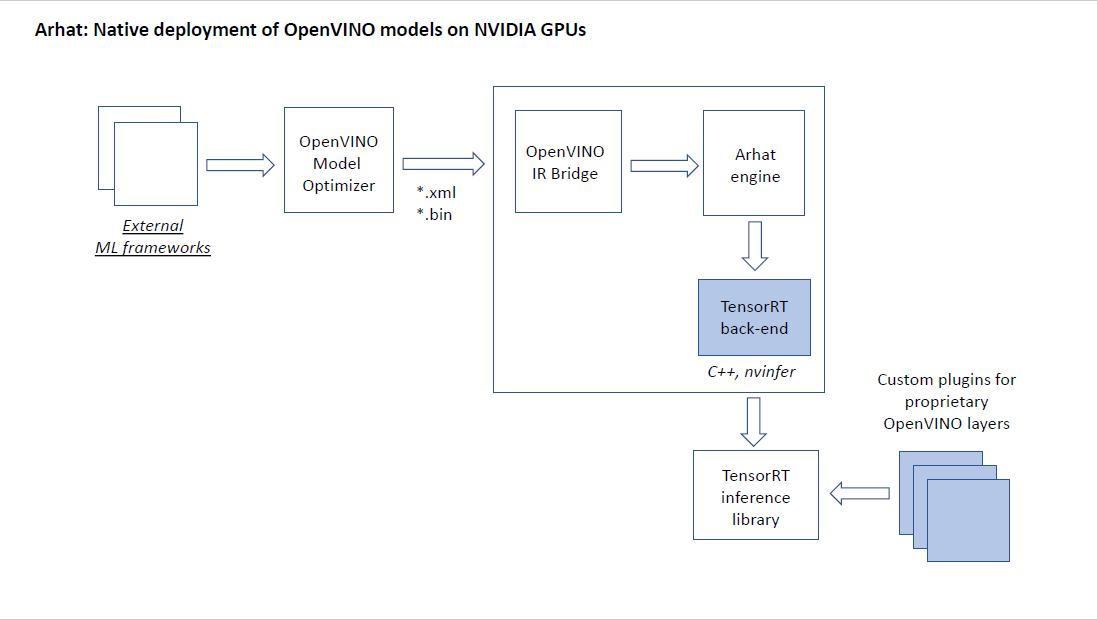

Interoperability between OpenVINO and TensorRT

To run OpenVINO models on TensorRT we have implemented a specialized Arhat back-end generating code that calls TensorRT C++ inference library instead of cuDNN.

The respective workflow is shown on the following figure.

There are several OpenVINO proprietary layer types that are not directly supported by TensorRT. We have implemented them in CUDA as custom TensorRT plugins. These layer types include:

- DetectionOutput

- PriorBoxClustered

- Proposal

- RegionYolo

- RoiPooling

The Arhat back-end for TensorRT enables native deployment of OpenVINO models on NVIDIA GPUs and opens the way to achieving the best OpenVINO performance on any hardware.
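The following Go sketch illustrates the kind of check a back-end might perform before code generation: it splits the distinct layer types of an imported model into those listed above, which need a custom plugin, and all others. The splitLayers helper and the example layer list are hypothetical, and treating every unlisted type as natively supported is a simplifying assumption of this sketch, not a statement about TensorRT.

```go
package main

import (
	"fmt"
	"sort"
)

// pluginLayers holds the layer types that, according to the list above,
// were implemented as custom TensorRT plugins.
var pluginLayers = map[string]bool{
	"DetectionOutput":   true,
	"PriorBoxClustered": true,
	"Proposal":          true,
	"RegionYolo":        true,
	"RoiPooling":        true,
}

// splitLayers partitions the distinct layer types of a model into those
// assumed to be handled natively and those that need a custom plugin.
// Both results are sorted alphabetically.
func splitLayers(types []string) (native, plugin []string) {
	seen := make(map[string]bool)
	for _, t := range types {
		if seen[t] {
			continue
		}
		seen[t] = true
		if pluginLayers[t] {
			plugin = append(plugin, t)
		} else {
			native = append(native, t)
		}
	}
	sort.Strings(native)
	sort.Strings(plugin)
	return native, plugin
}

func main() {
	// Hypothetical layer types of a YOLO-like model.
	model := []string{"Convolution", "ReLU", "Convolution", "RegionYolo", "Concat"}

	native, plugin := splitLayers(model)
	fmt.Println("native:", native)
	fmt.Println("plugin:", plugin)
}
```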

Performance evaluation, revisited

We repeated the benchmarking using the TensorRT back-end. Results are summarized in the following table. The numbers again represent the time, in milliseconds, required to process one image.

Model                             Arhat         OpenVINO      Arhat/cuDNN   Arhat/TensorRT
                                  Tiger Lake    Tiger Lake    Xavier NX     Xavier NX
------------------------------------------------------------------------------------------
resnet50-v1-7                     10.1          11.3          26.7          14.2
ssd_mobilenet_v1_fpn_coco         144           125           212           187
ssd_mobilenet_v2                  9.0           10.7          23.4          12.3
ssd_resnet50_v1_fpn_coco          195           183           305           248
faster_rcnn_inception_v2_coco     117           82.8          195           159
faster_rcnn_resnet50_coco         252           259           413           366
yolo_v2_tf                        64.4          60.8          114           83.3
yolo_v3_tf                        72.3          68.2          139           94.8
yolo_v4_tf                        140           135           250           191
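To read the table in relative terms, the short Go program below computes, for each model, how much faster the TensorRT back-end is than the cuDNN back-end on Xavier NX. The timings are copied from the table above. The row type and the speedup helper are introduced only for this illustration.

```go
package main

import "fmt"

// row holds the Xavier NX timings of one model, in milliseconds per image.
type row struct {
	model    string
	cudnn    float64 // Arhat/cuDNN
	tensorrt float64 // Arhat/TensorRT
}

// rows reproduces the two Xavier NX columns of the table above.
var rows = []row{
	{"resnet50-v1-7", 26.7, 14.2},
	{"ssd_mobilenet_v1_fpn_coco", 212, 187},
	{"ssd_mobilenet_v2", 23.4, 12.3},
	{"ssd_resnet50_v1_fpn_coco", 305, 248},
	{"faster_rcnn_inception_v2_coco", 195, 159},
	{"faster_rcnn_resnet50_coco", 413, 366},
	{"yolo_v2_tf", 114, 83.3},
	{"yolo_v3_tf", 139, 94.8},
	{"yolo_v4_tf", 250, 191},
}

// speedup returns how many times faster the candidate is than the baseline,
// given the processing time of each.
func speedup(baseline, candidate float64) float64 {
	return baseline / candidate
}

func main() {
	for _, r := range rows {
		fmt.Printf("%-32s %.2fx\n", r.model, speedup(r.cudnn, r.tensorrt))
	}
}
```

For resnet50-v1-7, for example, the ratio is 26.7 / 14.2, or roughly 1.9.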

Conclusion

This study demonstrated that Arhat can efficiently interoperate with various key components of the Intel and NVIDIA deep learning ecosystems. Furthermore, using Arhat makes it possible to overcome the limitations of each such component and to achieve results that are difficult or impossible to achieve otherwise. Arhat extends the capabilities of Intel deep learning software by providing a way to deploy OpenVINO models natively on a wider range of platforms.

Arhat can also be used for streamlined on-demand benchmarking of models on various platforms. Using Arhat for performance evaluation eliminates the overhead that might be caused by external deep learning frameworks, because the code generated by Arhat interacts directly with the optimized platform-specific deep learning libraries.