How To Build And Run Your Modern Parallel Code In C++17 and OpenMP 4.5 Library On NVIDIA GPUs

Before We Begin…

At the beginning of 2020, Intel Corporation deployed the first pre-release of the Intel® oneAPI™ Toolkit for heterogeneous computing.

The Intel® oneAPI™ Toolkit offers the Data Parallel C++ (DPC++) compiler and a wide range of Intel's high-performance libraries and tools for writing parallel code in C++17 and offloading its execution to a variety of hardware acceleration targets, such as Intel® CPUs, GPUs and FPGAs, without re-implementing the code for each specific hardware platform.

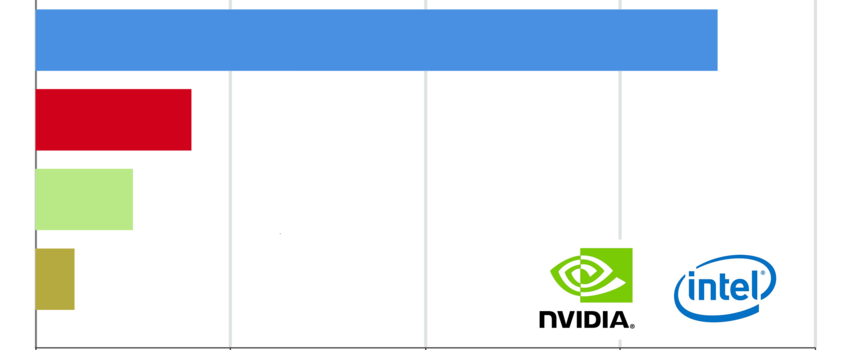

Presently, there are two main high-performance libraries backed by Intel that allow developers to write parallel code in C++ and Python for Intel's unified heterogeneous compute platform (xCPU):

• LLVM/Clang-10 OpenMP 4.5/5.0 Library With Offloading Capabilities:

Intel® Xeon® Phi™ Co-processors;

Nvidia® GeForce™ GPGPUs;

• oneAPI Toolkit (DPC++) OpenCL/SYCL Wrapper Library:

Intel® Core™, Intel® Xeon® CPUs;

Intel® UHD Graphics Neo;

Intel® PAC Platform FPGAs / ARRIA 10 GX;

Specifically, the latest versions of the new DPC++ compiler, released as part of the Intel® oneAPI™ Toolkit, are based on the LLVM/Clang 10.0.0 project (https://www.llvm.org/) rather than on other compilers such as the Intel® C++ Compiler or GNU GCC. DPC++/Clang provides offloading capabilities, which make it possible to build the same C++ code for a variety of hardware platforms and then execute it on more than one hardware acceleration target.

Initially, Intel's DPC++ compiler could build and run code not only for Intel's heterogeneous platform, but also for hardware accelerators from other vendors, such as Nvidia® GPUs. However, Nvidia GPU support was soon deprecated. Instead, the latest versions of the Intel® oneAPI™ Toolkit allow CL/SYCL kernels to be compiled and run on Nvidia GPUs by migrating the code with the DPC++ Compatibility Tool and using specific APIs that provide an Nvidia CUDA backend for executing those kernels.

In this blog, I will discuss in detail how to migrate your C++ parallel code back to the LLVM/Clang OpenMP 4.5/5.0 library and offload its execution to Nvidia GPUs.

There are two main open-source LLVM/Clang 10 source repositories on GitHub: Intel's staging distribution, created before the initial release of the Intel® oneAPI™ Toolkit, and the upstream LLVM project:

• https://github.com/intel/llvm - Intel staging area for llvm.org contribution. Home for Intel LLVM-based projects;

• https://github.com/llvm/llvm-project - The LLVM Project is a collection of modular and reusable compiler and toolchain technologies.

In this blog, we will build and configure the LLVM/Clang 10.0.0 compiler with offloading capabilities from the sources in the second (upstream LLVM project) repository.

Hardware Requirements

To set up the development environment, all we need is a local development machine based on an Intel® Core™ or Intel® Xeon® CPU (e.g. @ 3.6 GHz) with one or more Nvidia GeForce GPUs installed. SLI x2/SLI x3 configurations can be used, but SLI itself is not required, since CUDA and OpenMP offloading expose each GPU as a separate device. Please note that this approach will not work in virtual machines that use the generic virtual graphics adapter provided by Hyper-V, VMware, VirtualBox or QEMU.

Software Requirements

The approach discussed in this blog works only on a local development machine running Ubuntu Desktop 18.04.4 (Bionic Beaver) x86_64. Unfortunately, the LLVM/Clang 10.0.0 release cannot be used this way in a Microsoft Windows environment. Also, there is no need to update or downgrade the Ubuntu 18.04.4 kernel by compiling it from source, since version 5.4.0 of the Linux x86_64 kernel is already fully supported.

Installing Prerequisites

After installing Ubuntu Desktop 18.04.4 on the physical development machine, we first install the prerequisite packages by running the following command from a bash console with root privileges:

sudo apt install -y build-essential cmake libelf-dev libffi-dev pkg-config ninja-build git

This installs the required build toolchain, which includes the GNU GCC C++ compiler, the make/CMake utilities and the development libraries needed to compile the LLVM/Clang project (libelf-dev, for instance, is used by the plugins of the OpenMP offloading runtime).

Downloading And Installing Nvidia Accelerated Graphics Driver

The next step is to install the latest Nvidia graphics driver for the graphics cards installed in the development machine. Before doing that, we need to disable and blacklist the standard Nouveau graphics driver installed during the Ubuntu Desktop 18.04 setup:

sudo bash -c "echo blacklist nouveau >> /etc/modprobe.d/blacklist-nvidia-nouveau.conf"

sudo bash -c "echo options nouveau modeset=0 >> /etc/modprobe.d/blacklist-nvidia-nouveau.conf"

sudo update-initramfs -u

These commands blacklist the Nouveau driver and rebuild the current Ubuntu initramfs image; to finish disabling the driver, reboot the development machine. After the reboot, lsmod | grep nouveau should print nothing.

Once the Nouveau driver has been disabled, we can download and install the Nvidia graphics driver:

• https://www.nvidia.com/en-us/geforce/drivers/

After downloading the Nvidia graphics driver, run the installation file with the following command:

sudo sh ./nvidia/NVIDIA-Linux-x86_64-440.100.run --silent

The Nvidia CUDA Development Toolkit installer also bundles the Nvidia graphics driver, but it is strongly recommended to install the driver separately and then install only the CUDA development tools. Finally, after installing the graphics driver, reboot the development machine once again for the changes to take effect.

Downloading And Installing Nvidia CUDA Development Toolkit

Similarly, we need to install the Nvidia CUDA Development Toolkit, which provides the Nvidia GPU compute capabilities:

• https://developer.nvidia.com/cuda-downloads

Specifically, we need to download Nvidia CUDA Toolkit 10.1 (the 10.1.243 installer used below), since LLVM/Clang 10 does not support newer CUDA releases.

After downloading the installation file, run the following command to install the CUDA Toolkit:

sudo sh ./nvidia/cuda_10.1.243_418.87.00_linux.run --silent --override --run-nvidia-xconfig --toolkit

Here, --silent runs the installer non-interactively, --toolkit installs only the CUDA Toolkit (the driver is already installed), --override skips the compiler and library detection checks that could otherwise abort the installation, and --run-nvidia-xconfig asks the installer to update the Xorg configuration. After that, reboot the development machine to apply the configuration changes.

Building And Configuring LLVM/Clang Compiler v.10.0.0

At this point, we first create the directory into which the LLVM/Clang compiler binaries will be installed. To keep things simple, I will create it under /root:

sudo mkdir /root/llvm

To ensure that the LLVM/Clang compiler will be properly built from sources, we must declare two environment variables:

export LLVM_SRC=$PWD/llvm-10.0.0.src

export LLVM_PATH=/root/llvm

The first variable, $LLVM_SRC, specifies the directory where the LLVM/Clang sources are located, and the second, $LLVM_PATH, specifies the directory where the built LLVM/Clang compiler binaries will be installed.

After setting the environment variables, download the LLVM/Clang project by cloning it from the GitHub repository and checking out the release/10.x branch (cd is a shell builtin, so it cannot be run through sudo):

sudo git clone https://github.com/llvm/llvm-project.git llvm-10.0.0.src

cd $LLVM_SRC && sudo git checkout -b release/10.x origin/release/10.x

Once the source tree has been downloaded, create an empty build directory and configure the build with the following commands:

sudo mkdir llvm_build && cd llvm_build

sudo cmake -DCLANG_OPENMP_NVPTX_DEFAULT_ARCH=sm_60 -DLIBOMPTARGET_NVPTX_COMPUTE_CAPABILITIES=37,60,70 -DLLVM_ENABLE_PROJECTS="clang;clang-tools-extra;libcxx;libcxxabi;lld;openmp" -DCMAKE_BUILD_TYPE=RELEASE -DCMAKE_INSTALL_PREFIX=$LLVM_PATH -DCMAKE_C_COMPILER=gcc -DCMAKE_CXX_COMPILER=g++ $LLVM_SRC/llvm

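Here, -DCLANG_OPENMP_NVPTX_DEFAULT_ARCH=sm_60 sets the GPU architecture that Clang targets by default when offloading OpenMP code to nvptx64, and -DLIBOMPTARGET_NVPTX_COMPUTE_CAPABILITIES=37,60,70 lists the compute capabilities (3.7, 6.0 and 7.0) for which the OpenMP device runtime library is built; adjust both to match your GPUs. This cmake invocation uses CMake's default Unix Makefiles generator, so the build below runs make. To use the ninja-build package installed earlier instead, add -G Ninja to the cmake command and run ninja in place of make.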

After the LLVM/Clang project has been configured, build and install the binaries with the following commands:

export TMPDIR=/dev/shm

sudo make -j $(nproc) && sudo make install

The first command in the chain builds the LLVM/Clang compiler executables, while the second installs the compiler by copying all the built files to their proper location on disk (here, /root/llvm).

After executing these commands, the LLVM/Clang compiler installation is normally completed.

Finally, the last installation step is to set the environment variables required for the LLVM/Clang compiler to function properly. This is typically done by appending the following lines to the /root/.bashrc file. Because a >> redirection would run without root privileges, the lines are appended with sudo tee -a; the escaped \$ keeps variables such as PATH unexpanded until .bashrc is read, while $LLVM_PATH is substituted immediately:

sudo tee -a /root/.bashrc > /dev/null << EOF
export PATH=$LLVM_PATH:$LLVM_PATH/bin:\$PATH
export LD_LIBRARY_PATH=$LLVM_PATH/libexec:\$LD_LIBRARY_PATH
export LD_LIBRARY_PATH=$LLVM_PATH/lib:\$LD_LIBRARY_PATH
export LIBRARY_PATH=$LLVM_PATH/libexec:\$LIBRARY_PATH
export LIBRARY_PATH=$LLVM_PATH/lib:\$LIBRARY_PATH
export MANPATH=$LLVM_PATH/share/man:\$MANPATH
export C_INCLUDE_PATH=$LLVM_PATH/include:\$C_INCLUDE_PATH
export CPLUS_INCLUDE_PATH=$LLVM_PATH/include:\$CPLUS_INCLUDE_PATH
export CLANG_VERSION=10.0.0
export CLANG_PATH=$LLVM_PATH
export CLANG_BIN=$LLVM_PATH/bin
export CLANG_LIB=$LLVM_PATH/lib
EOF

After the installation process is complete, reboot the development machine (or open a new root shell, e.g. with sudo -i, so that /root/.bashrc is read) and start using clang/clang++. To verify that the compiler has been installed properly, run the following commands in the bash console:

clang --version

clang++ --version

Building And Running Code In C++17 On Nvidia GPUs

To make sure that everything works, let's build and run the following OpenMP offloading program on the Nvidia GPU (it is written in C, but the same directives work in C++17 code compiled with clang++):

simple_gpu.c:

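#include <stdio.h>
#include <omp.h>

const size_t N = 1000000000L;

int main()
{
    /* Offloaded to the GPU: the outer iterations are distributed across teams,
       then shared among the threads of each team. */
    #pragma omp target teams distribute parallel for
    for (size_t i = 0; i < N; i++)
    {
        for (size_t j = 0; j < N / 100; j++) { /* ... (loop body elided) */ }
    }

    return 0;
}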

To build this code, use the following command:

clang -o simple_gpu -fopenmp -fopenmp-targets=nvptx64-nvidia-cuda simple_gpu.c

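By default, the device code is generated for the architecture configured during the build (sm_60); for a GPU with a different compute capability, add -Xopenmp-target -march=sm_XX to the command above, with XX replaced by that compute capability. Once compilation completes, run the program from the bash console: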

./simple_gpu

While the program is running, we can verify that it actually executes on the Nvidia GPU. To do that, open another bash console and run the following command:

watch -n 0.1 nvidia-smi

This command refreshes the Nvidia graphics card status table every 0.1 seconds. To confirm that the program runs on the GPU, watch the GPU-Util column: a value close to 100% indicates that the GPU is busy executing the program.

Acknowledgements

The approach introduced in this blog was also briefly discussed in https://freecompilercamp.org/llvm-openmp-build/ manual.